We are spiritual beings having a physical experience. The purpose of incarnating is simply to give us the unique experiences we need for our progressive evolution toward perfection. Within each of our Earthly experiences, there are important lessons we’ve chosen to learn. On a subconscious level, we are attracting the people and experiences that will teach us these lessons.

You can heighten your awareness to help you recognize and embrace the synchronistic opportunities that are always presenting themselves in the form of people, places and events around you. First of all, commit to engaging in the reality that surrounds you, which might also mean turning off devices. You must also make an effort to disengage from the flurry of thoughts swirling through your head that distract you from fully appreciating your surroundings. A powerful practice I use is to say out loud or in my head, “Moment!” This immediately draws my attention back into the present, and I can more effectively engage with the world around me.

A scientific concept that offers a useful metaphor for the importance of our engagement with others is quantum entanglement. In physics, particles that have interacted can remain correlated with each other even after they are separated. By analogy, consider that every interaction you have with another living being may leave a lasting imprint on both of you.

With this in mind, ask yourself: “What type of karmic imprint do I want to leave on myself and others throughout the day?” and “How can I improve the quality of the energetic connections I am making?”

Consider that our paths are predestined. We have come here on Earth to learn the life lessons that will allow us to progress on our paths. As such, we naturally create the experiences that are most likely to help us learn and grow. We attract the people, places and things that are most conducive to our soul’s evolution. Spiritual guides may also place certain people in our path to assist us on our journey. The key to recognizing these people and places as opportunities to learn and grow is to continually search for the deeper meaning of our interactions with them.

We have to ask ourselves questions like, “Why have I been placed in this particular location at this particular time, and how is this situation conducive to my growth?” We also have to explore relationships on a deeper level by asking ourselves, “Why have I been connected with this person and how can we benefit each other?” and “What lessons can we learn from each other?” By making a sincere effort to uncover the meaning behind our everyday experiences and interactions, we can reveal their higher purpose and learn to go with the flow.

Here are three powerful ways to remain open to the synchronistic flow of life’s stream:

- Use the practice of saying, “Moment!” whenever you notice that you have become disconnected from the present moment.

- Be aware of the karmic imprint you are leaving on yourself and others with every reaction and interaction.

- Recognize that the people, places and things you have attracted into your life all represent opportunities to learn the lessons that are most conducive to your evolutionary path.

After I began to recognize the divinity within the experiences of my life, I developed a strong faith that everything happens just as it should and for a good reason. Trusting in the divine plan has made me feel much more at peace with the events that unfold around me. I know I have projected these experiences in order to learn the lessons I need for my soul’s evolution and refinement.

Now that I am more capable of taming my mind and controlling the emotions of fear, anger and resentment, I am not experiencing those emotions reflected back to me. As a result, I naturally create more harmony and encounter less difficulty. This perspective has made my life so much smoother and more enjoyable. I’ve also become acutely aware that as I project compassion and kindness, these divine traits are reflected back to me.

This is true across the board and rarely does it fail me. When it does, I am able to see the symbolic nature of the experience and then identify my own personal emotions that, left unguarded, created conflict. Negative feelings, or trapped emotions, that still need my attention and repair are exposed. From this perspective, I am then grateful for the conflict because it revealed lessons I still need to learn. I can commit to learning those important life lessons right then and there, and avoid re-creating another experience just like it!

It’s really that easy. By opening yourself up to the world around you in this way, you are opening to spirit. Aligned with spirit, magical synchronicities will unfold as you meet opportunity at every intersection. The power is in the present moment.


The electrons are essentially drawn across the cytochrome oxidase system by the oxygen at the positive pole of the intracellular battery. The more oxygen in the system, the stronger the pull. Breathing exercises, eating high-oxygen foods, and living in atmospherically clean, high-oxygen environments increase our overall oxygen content. The cytochrome oxidase system exists in every cell and requires electron energy to function. This electron energy comes from plant foods as well as what we directly absorb. When food is cooked, the basic harmonic resonance pattern of the living electron energy of the live food is at least partially destroyed. Once this is understood, the logical step is to eat high-electron foods such as fruits, vegetables, raw nuts and seeds, and sprouted or soaked grains. People who eat refined, cooked, highly processed foods diminish the amount of solar electrons energizing the system.

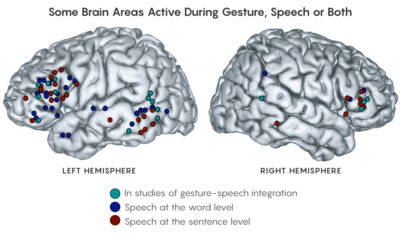

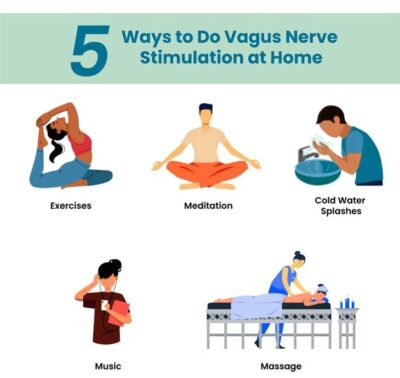

Singing, humming, chanting and even gargling can improve vagal tone because the vagus nerve controls your vocal cords and the muscles at the back of your throat, explains Dr Ravindran. A brain imaging study even found that the humming involved in the meditation chant ‘om’ reduced activity in areas of the brain associated with depression.

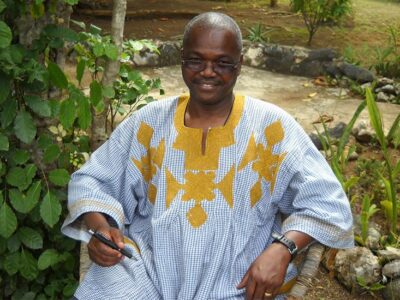

Malidoma is from a collectivist society. Born into the Dagara tribe in Burkina Faso, he is the grandson of a renowned healer. He travels around the world but is based in the U.S. Malidoma sees himself as a bridge between his culture and the United States, existing to “bring the wisdom of our people to this part of the world.” Malidoma’s “career” (he chuckles at the term) is some combination of cultural ambassador, homeopath, and sage. He travels the country doing rituals and consultations, writing books, and giving speeches. He has three master’s degrees and two doctorates from Brandeis University. Sometimes he calls himself a “shaman,” because people know what that means (sort of) and it’s similar to his title back in Burkina Faso: a titiyulo, one who “constantly inquires with other dimensions.”
