The chorus of the theme song for the movie Fame, performed by actress Irene Cara, includes the line “I’m gonna live forever.” Cara was, of course, singing about the posthumous longevity that fame can confer. But a literal expression of this hubris resonates in some corners of the world—especially in the technology industry. In Silicon Valley, immortality is sometimes elevated to the status of a corporeal goal. Plenty of big names in big tech have sunk funding into ventures to solve the problem of death as if it were just an upgrade to your smartphone’s operating system.

Yet what if death simply cannot be hacked and longevity will always have a ceiling, no matter what we do? Researchers have now taken on the question of how long we can live if, by some combination of serendipity and genetics, we do not die from cancer, heart disease or getting hit by a bus. They report that even with the things that usually kill us set aside, the body’s capacity to restore equilibrium to its myriad structural and metabolic systems after disruptions still fades with time. And even if we make it through life with few stressors, this incremental decline sets the maximum life span for humans at somewhere between 120 and 150 years. In the end, if the obvious hazards do not take our lives, this fundamental loss of resilience will do so, the researchers conclude in findings published in May 2021 in Nature Communications.

“They are asking the question of ‘What’s the longest life that could be lived by a human complex system if everything else went really well, and it’s in a stressor-free environment?’” says Heather Whitson, director of the Duke University Center for the Study of Aging and Human Development, who was not involved in the paper. The team’s results point to an underlying “pace of aging” that sets the limits on life span, she says.

For the study, Timothy Pyrkov, a researcher at a Singapore-based company called Gero, and his colleagues looked at this “pace of aging” in three large cohorts in the U.S., the U.K. and Russia. To evaluate deviations from stable health, they assessed changes in blood cell counts and the daily number of steps taken and analyzed them by age groups.

For both blood cell and step counts, the pattern was the same: as age increased, some factor beyond disease drove a predictable and incremental decline in the body’s ability to return blood cells or gait to a stable level after a disruption. When Pyrkov and his colleagues in Moscow and Buffalo, N.Y., used this predictable pace of decline to determine when resilience would disappear entirely, leading to death, they found a range of 120 to 150 years. (In 1997 Jeanne Calment, the oldest person on record to have ever lived, died in France at the age of 122.)

The researchers also found that with age, the body’s response to insults could increasingly range far from a stable normal, requiring more time for recovery. Whitson says that this result makes sense: A healthy young person can produce a rapid physiological response to adjust to fluctuations and restore a personal norm. But in an older person, she says, “everything is just a little bit dampened, a little slower to respond, and you can get overshoots,” such as when an illness brings on big swings in blood pressure.

Measurements such as blood pressure and blood cell counts have a known healthy range, however, Whitson points out, whereas step counts are highly personal. The fact that Pyrkov and his colleagues chose a variable that is so different from blood counts and still discovered the same decline over time may suggest a real pace-of-aging factor in play across different domains.

Study co-author Peter Fedichev, who trained as a physicist and co-founded Gero, says that although most biologists would view blood cell counts and step counts as “pretty different,” the fact that both sources “paint exactly the same future” suggests that this pace-of-aging component is real.

The authors pointed to social factors that reflect the findings. “We observed a steep turn at about the age of 35 to 40 years that was quite surprising,” Pyrkov says. For example, he notes, this period is often a time when an athlete’s sports career ends, “an indication that something in physiology may really be changing at this age.”

The desire to unlock the secrets of immortality has likely been around as long as humans’ awareness of death. But a long life span is not the same as a long health span, says S. Jay Olshansky, a professor of epidemiology and biostatistics at the University of Illinois at Chicago, who was not involved in the work. “The focus shouldn’t be on living longer but on living healthier longer,” he says.

“Death is not the only thing that matters,” Whitson says. “Other things, like quality of life, start mattering more and more as people experience the loss of them.” The death modeled in this study, she says, “is the ultimate lingering death. And the question is: Can we extend life without also extending the proportion of time that people go through a frail state?”

The researchers’ “final conclusion is interesting to see,” Olshansky says. He characterizes it as “Hey, guess what? Treating diseases in the long run is not going to have the effect that you might want it to have. These fundamental biological processes of aging are going to continue.”

The idea of slowing down the aging process has drawn attention, not just from Silicon Valley types who dream about uploading their memories to computers but also from a cadre of researchers who view such interventions as a means to “compress morbidity”—to diminish illness and infirmity at the end of life to extend health span. The question of whether this will have any impact on the fundamental upper limits identified in the Nature Communications paper remains highly speculative. But some studies are being launched—testing the diabetes drug metformin, for example—with the goal of attenuating hallmark indicators of aging.

In this same vein, Fedichev and his team are not discouraged by their estimates of maximum human life span. His view is that their research marks the beginning of a longer journey. “Measuring something is the first step before producing an intervention,” Fedichev says. As he puts it, the next steps, now that the team has measured this independent pace of aging, will be to find ways to “intercept the loss of resilience.”

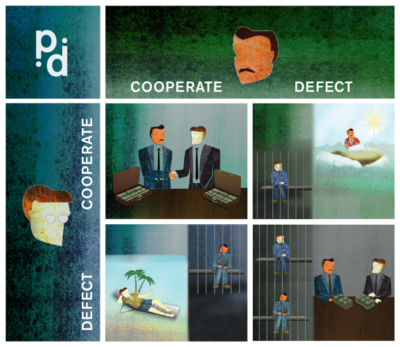

Many people cheat on taxes — no mystery there. But many people don’t, even if they wouldn’t be caught — now, that’s weird. Or is it? Psychologists are deeply perplexed by human moral behavior, because it often doesn’t seem to make any logical sense. You might think that we should just be grateful for it. But if we could understand these seemingly irrational acts, perhaps we could encourage more of them.

When it comes to getting people to cooperate more, Rand’s work brings good news. Our intuitions are not fixed at birth. We develop social heuristics, or rules of thumb for interpersonal behavior, based on the interactions we have. Change those interactions and you change behavior.

If you want to learn something about change there is no better place to look than evolution. Nothing represents a continuous and unrelenting cycle of order, disorder, and reorder on a grander scale. For long periods of time, Earth is relatively stable. Sweeping changes—warming, cooling, or an asteroid falling from space, for example—occur. These inflection points are followed by periods of disruption and chaos. Eventually, Earth, and everything on it, regains stability, but that stability is somewhere new.

The more you define yourself by any one activity, the more fragile you become. If that activity doesn’t go well or something changes unexpectedly, you lose a sense of who you are. But with self-complexity, you develop multiple components to your identity.