If Socrates was the wisest person in Ancient Greece, then large language models must be the most foolish systems in the modern world.

In his Apology, Plato tells the story of how Socrates’s friend Chaerephon goes to visit the oracle at Delphi. Chaerephon asks the oracle whether there is anyone wiser than Socrates. The priestess responds that there isn’t: Socrates is the wisest of them all.

At first, Socrates is puzzled. How could he be the wisest when so many other people were well known for their knowledge and wisdom, while Socrates himself claims to lack both?

He makes it his mission to solve the mystery. He goes around interrogating a series of politicians, poets, and artisans (as philosophers do). And what does he find? Socrates’s investigation reveals that those who claim to have knowledge either do not really know what they think they know, or else know far less than they claim to know.

Socrates is the wisest, then, because he is aware of the limits of his own knowledge. He doesn’t think he knows more than he does, and he doesn’t claim to know more than he does.

How does that compare with large language models like GPT-4?

In contrast to Socrates, large language models don’t know what they don’t know. These systems are not built to be truth-tracking. They are not based on empirical evidence or logic. They make statistical guesses that are very often wrong.

Large language models don’t inform users that they are making statistical guesses. They present incorrect guesses with the same confidence as they present facts. Whatever you ask, they will come up with a convincing response, and it’s never “I don’t know,” even though it should be. If you ask ChatGPT about current events, it will remind you that it only has access to information up to September 2021 and it can’t browse the internet. For almost any other kind of question, it will venture a response that will often mix facts with confabulations.

The philosopher Harry Frankfurt famously argued that bullshit is speech that is typically persuasive but is detached from a concern with the truth. Large language models are the ultimate bullshitters because they are designed to be plausible (and therefore convincing) with no regard for the truth. Bullshit doesn’t need to be false. Sometimes bullshitters describe things as they are, but if they are not aiming for the truth, what they say is still bullshit.

And bullshit is dangerous, warned Frankfurt. Bullshit is a greater threat to the truth than lies. The person who lies thinks she knows what the truth is, and is therefore concerned with the truth. She can be challenged and held accountable; her agenda can be inferred. The truth-teller and the liar play on opposite sides of the same game, as Frankfurt puts it. The bullshitter pays no attention to the game. Truth doesn’t even get confronted; it gets ignored; it becomes irrelevant.

Bullshit is more dangerous the more persuasive it is, and large language models are persuasive by design on two counts. First, they have analysed enormous amounts of text, which allows them to make a statistical guess as to what is a likely appropriate response to the prompt given. In other words, they mimic the patterns that they have picked up in the texts they have gone through. Second, these systems are refined through a process of reinforcement learning from human feedback (RLHF). The reward model is trained directly on human feedback: humans teach it what kinds of responses they prefer. Through numerous iterations, the system learns how to satisfy human beings’ preferences, thereby becoming more and more persuasive.

As the proliferation of fake news has taught us, human beings don’t always prefer truth. Falsity is often much more attractive than bland truths. We like good, exciting stories much more than we like truth. Large language models are analogous to a nightmare student, professor, or journalist: someone who, instead of acknowledging the limits of their knowledge, tries to wing it by bullshitting you.

Plato’s Apology suggests that we should build AI to be more like Socrates and less like bullshitters. We shouldn’t expect tech companies to design ethically out of their own good will. Silicon Valley is well known for its bullshitting abilities, and companies can even feel compelled to bullshit to stay competitive in that environment. That companies working in a corporate bullshitting environment create bullshitting products should hardly be surprising. One of the things that the past two decades have taught us is that tech needs as much regulation as any other industry, and no industry can regulate itself. We regulate food, drugs, telecommunications, finance, transport; why wouldn’t tech be next?

Plato leaves us with a final warning. One of the lessons of his work is to beware the flaws of democracy. Athenian democracy killed Socrates. It condemned its most committed citizen, its most valuable teacher, while it allowed sophists—the bullshitters of that time—to thrive. Our democracies seem likewise vulnerable to bullshitters. In the recent past, we have made them prime ministers and presidents. And now we are fueling the power of large language models, considering using them in all walks of life—even in contexts like journalism, politics, and medicine, in which truth is vital to the health of our institutions. Is that wise?

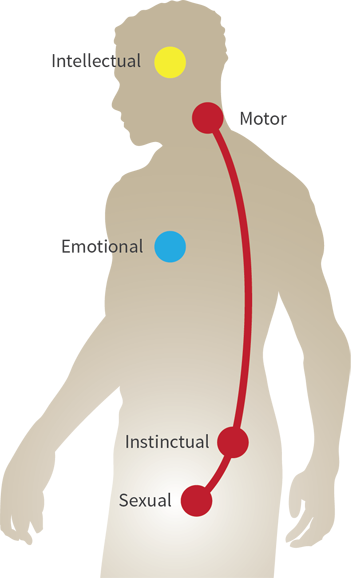
